Of Q‑Tables and Three‑in‑a‑Rows: Training an RL Knight in Tic‑Tac‑Toe

Reinforcement Learning is fairly popular at the moment. In this chronicle, we shall embark on a quest to forge a Reinforcement Learning model for the noble game of Tic‑Tac‑Toe. We’ll write our own environment, summon a DQN sorcerer from Stable Baselines 3, and ultimately witness our AI crush the humblest of human challengers (or at least draw more than half the time).

1. Summoning the Tic‑Tac‑Toe Environment

First things first: let us craft our battlefield. In tictactoe_env.py, we define a Gymnasium environment where:

- The board is a flat array of length 9 (0 = empty, 1 = our agent’s “X”, –1 = opponent’s “O”).

- The opponent always strikes first with a random move (easy fodder for training).

- Illegal moves incur a –10 penalty (a harsh tutor, indeed).
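The three rules above can be sketched in plain Python. This is a minimal illustration rather than the contents of tictactoe_env.py: the helper names (new_board, legal_moves, opponent_random_move, agent_move) are hypothetical, and whether an illegal move also ends the episode is not stated in the post, so the sketch only reports it through a flag.

```python
import random

EMPTY, AGENT, OPPONENT = 0, 1, -1
ILLEGAL_MOVE_PENALTY = -10  # penalty value taken from the post


def new_board():
    """Return an empty board: a flat list of nine cells."""
    return [EMPTY] * 9


def legal_moves(board):
    """Indices (0-8) of the cells that are still empty."""
    return [i for i, cell in enumerate(board) if cell == EMPTY]


def opponent_random_move(board, rng=random):
    """Place an O (-1) on a uniformly random empty cell, if any remain."""
    moves = legal_moves(board)
    if moves:
        board[rng.choice(moves)] = OPPONENT


def agent_move(board, action):
    """Place an X (1) at `action` and return (reward, illegal).

    An occupied or out-of-range cell leaves the board unchanged
    and yields the -10 penalty.
    """
    if not 0 <= action < 9 or board[action] != EMPTY:
        return ILLEGAL_MOVE_PENALTY, True
    board[action] = AGENT
    return 0, False
```

In a real Gymnasium environment these helpers would sit behind reset() and step(), with the opponent's opening move made during reset().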


Under the hood, _check_winner scans rows, columns, and diagonals for a sum of ±3. A sum of 3 means our agent wins; –3 means the foe triumphs.
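As a rough illustration of that line-sum trick, here is a standalone version. The post's _check_winner is a method on the environment; the free function below, the WIN_LINES table, and the is_draw helper are assumptions made for the sake of a self-contained sketch.

```python
# The eight winning lines, as index triples into the flat 9-cell board.
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def check_winner(board):
    """Return 1 if the agent (X) owns a line, -1 if the opponent does, else 0."""
    for a, b, c in WIN_LINES:
        total = board[a] + board[b] + board[c]
        if total == 3:    # three cells holding 1
            return 1
        if total == -3:   # three cells holding -1
            return -1
    return 0


def is_draw(board):
    """A draw is a full board on which nobody owns a line."""
    return check_winner(board) == 0 and all(cell != 0 for cell in board)
```

Because cells hold only 0, 1, or -1, a line can sum to 3 or -3 only when all three cells belong to the same player, which is why a single addition per line is enough.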

2. Conjuring the DQN Agent

With battlefield in place, we enlist the services of a Deep Q‑Network. In train.py, we:

- Instantiate train & evaluation environments.

- Call forth a DQN with MLP policy.

- Set learning rate, buffer size, γ, and other arcane hyperparameters.

- Attach an EvalCallback to record our best model.

- Train for 200,000 timesteps.

- Save the champion’s weights.
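Those steps could look roughly like the sketch below. Only the 200,000 timesteps, the MLP policy, and the use of EvalCallback come from the post. The class name TicTacToeEnv, every hyperparameter value, the evaluation frequency, and the save paths are placeholders chosen for illustration, not the author's settings.

```python
TOTAL_TIMESTEPS = 200_000  # from the post

# Placeholder values: the post names these knobs but not their settings.
DQN_KWARGS = dict(
    learning_rate=1e-3,
    buffer_size=50_000,
    gamma=0.99,
    exploration_fraction=0.3,
)


def train(total_timesteps=TOTAL_TIMESTEPS):
    # Imported lazily so the constants above can be read without SB3 installed.
    from stable_baselines3 import DQN
    from stable_baselines3.common.callbacks import EvalCallback
    from stable_baselines3.common.monitor import Monitor

    from tictactoe_env import TicTacToeEnv  # assumed class name

    # Separate environments for training and evaluation.
    train_env = Monitor(TicTacToeEnv())
    eval_env = Monitor(TicTacToeEnv())

    model = DQN("MlpPolicy", train_env, verbose=1, **DQN_KWARGS)

    # Periodically evaluate and keep the best-scoring model on disk.
    eval_callback = EvalCallback(
        eval_env,
        best_model_save_path="saved_model/best",  # assumed path
        eval_freq=5_000,
        n_eval_episodes=100,
        deterministic=True,
    )

    model.learn(total_timesteps=total_timesteps, callback=eval_callback)
    model.save("saved_model/tictactoe_dqn")  # SB3 appends the .zip suffix
    return model
```

Calling train() runs the whole pipeline. Passing a smaller total_timesteps is a quick way to smoke-test the setup before committing to the full run.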


After a few minutes (or hours, depending on your GPU’s mood), you’ll have a tictactoe_dqn.zip artifact ready to deploy.

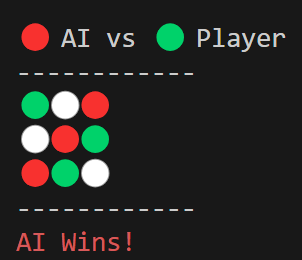

3. The CLI Tournament

What good is a champion if it cannot demonstrate its prowess? Enter cli.py, a simple terminal interface that:

- Draws the 3×3 board with emojis (🔴 for AI, 🟢 for Player, ⚪ for empty).

- Prompts the human hero for moves (0–8).

- Alternates turns until someone wins or the board is full.
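The drawing and prompting parts of such an interface might be sketched as follows. The function names (render, parse_move, ask_human) are hypothetical, and the mapping of 1 to the AI and -1 to the player is assumed to mirror the environment's board encoding.

```python
SYMBOLS = {1: "🔴", -1: "🟢", 0: "⚪"}  # AI, player, empty (as in the post)


def render(board):
    """Return the flat 9-cell board as three lines of emojis."""
    rows = (board[r * 3:r * 3 + 3] for r in range(3))
    return "\n".join(" ".join(SYMBOLS[cell] for cell in row) for row in rows)


def parse_move(text, board):
    """Return a playable cell index (0-8), or None if the input is unusable."""
    try:
        move = int(text.strip())
    except ValueError:
        return None
    if not 0 <= move <= 8 or board[move] != 0:
        return None
    return move


def ask_human(board):
    """Keep prompting until the player names an empty cell."""
    while True:
        move = parse_move(input("Your move (0-8): "), board)
        if move is not None:
            return move
        print("Please enter the number of an empty cell.")
```

The turn loop would then alternate between ask_human and the model's choice, printing render(board) after each move. With Stable Baselines 3 the AI's move would typically come from DQN.load(...) followed by model.predict(obs, deterministic=True).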


Boot up python cli.py, choose whether you want the first move, and prepare to be dazzled (or at least forced into a draw).

4. The Results

After training, our DQN agent achieves a mean reward of 0.6. This translates to:

- Win rate ≈ 50% against a random opponent.

- Draw rate ≈ 45%.

- Loss rate ≈ 5% (illegal moves are swiftly punished).
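The post does not list the reward given for each outcome, so the link between these rates and the mean reward cannot be checked directly. As a small worked example, the sketch below computes the expected reward under a hypothetical scheme of +1 for a win, 0 for a draw, and -1 for a loss; both the helper name and those reward values are assumptions.

```python
def expected_reward(rates, rewards):
    """Weighted average of per-outcome rewards, given outcome frequencies."""
    return sum(rates[outcome] * rewards[outcome] for outcome in rates)


rates = {"win": 0.50, "draw": 0.45, "loss": 0.05}          # figures from the post
assumed_rewards = {"win": 1.0, "draw": 0.0, "loss": -1.0}  # hypothetical scheme

print(round(expected_reward(rates, assumed_rewards), 2))  # prints 0.45
```

Under that assumed scheme the rates give about 0.45 rather than 0.6, so the actual environment presumably uses different reward values, for instance a small positive reward for a draw.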

Against a human who knows only “center first,” the AI will happily force a draw every time, and will exploit any sloppy corner openings.

5. Attachments & Repository

All code lives in this repo:

- tictactoe_env.py – Custom Gym environment

- train.py – Training script with DQN

- cli.py – Play against your model

You may download the trained weights here:

saved_model/tictactoe_dqn.zip

Feel free to fork, tweak hyperparameters, or swap in a more fearsome opponent (Minimax, Monte Carlo, your grandma’s intuition, etc.).

Conclusion

Tic‑Tac‑Toe is a humble playground, but it teaches us the essence of RL: exploration, exploitation, and the delicate dance of rewards. From a blank 3×3 grid, our agent learned to block, to fork, and to force a draw against random foes, and even to pester strategic humans. So next time someone challenges you to “noughts and crosses,” send out your RL knight. And remember, in the world of reinforcements, even a simple game can yield grand adventures.

May your episodes be endless and your Q‑values ever convergent!

https://zsgs.design/2025/04/19/of-q‑tables-and-three‑in‑a‑rows-training-an-rl-knight-in-tic‑tac‑toe/